In April, the Computing Community Consortium (CCC) commissioned members of the computer architecture research community to generate a short report to help guide strategic thinking in this space. The effort aimed to complement and synthesize other recent documents, including the CCC’s Advancing Computer Architecture Research (ACAR) visioning reports and a study by the National Academies. Today, the CCC is releasing the resultant community white paper, 21st Century Computer Architecture:

Information and communication technology (ICT) is transforming our world, including healthcare, education, science, commerce, government, defense, and entertainment. It is hard to remember that 20 years ago the first step in information search involved a trip to the library, 10 years ago social networks were mostly physical, and 5 years ago “tweets” came from cartoon characters.

Importantly, much evidence suggests that ICT innovation is accelerating with many compelling visions moving from science fiction toward reality. Appendix A both touches upon these visions and seeks to distill their attributes. Future visions include personalized medicine to target care and drugs to an individual, sophisticated social network analysis of potential terrorist threats to aid homeland security, and telepresence to reduce the greenhouse gases spent on commuting. Future applications will increasingly require processing on large, heterogeneous data sets (“Big Data”), using distributed designs, working within form-factor constraints, and reconciling rapid deployment with efficient operation [more following the link…].

Two key – but often invisible – enablers for past ICT innovation have been semiconductor technology and computer architecture. Semiconductor innovation has repeatedly provided more transistors (Moore’s Law) for roughly constant power and cost per chip (Dennard Scaling). Computer architects took these rapid transistor budget increases and discovered innovative techniques to scale processor performance and mitigate memory system losses. The combined effect of technology and architecture has provided ICT innovators with exponential performance growth at near constant cost.
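Here is a rough sketch of why Dennard scaling let transistor counts grow without power growing with them. It uses the idealized constant-field scaling rules and assumes that every linear device dimension and the supply voltage shrink by the same factor κ > 1. The symbols κ, L, W, t_ox, V, C, f and N are illustrative and do not come from the white paper.

$$
\begin{aligned}
L,\ W,\ t_{ox} &\to \frac{L}{\kappa},\ \frac{W}{\kappa},\ \frac{t_{ox}}{\kappa}, \qquad V \to \frac{V}{\kappa} \\
C \propto \frac{\varepsilon L W}{t_{ox}} &\to \frac{C}{\kappa}, \qquad f \propto \frac{1}{\text{delay}} \to \kappa f \\
P_{\text{transistor}} = C V^2 f &\to \frac{C}{\kappa}\cdot\frac{V^2}{\kappa^2}\cdot\kappa f = \frac{P_{\text{transistor}}}{\kappa^2} \\
N_{\text{per area}} \to \kappa^2 N_{\text{per area}} &\;\Rightarrow\; \frac{P}{\text{area}} = N_{\text{per area}}\,P_{\text{transistor}} \to \text{constant}
\end{aligned}
$$

In this idealized picture, each generation packs κ² more transistors into the same area and runs them faster, while power density stays flat. Once supply voltage stopped shrinking along with feature size, the third line no longer held. Power per transistor then fell more slowly than density rose, and so power density began to climb.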

Because most technology and computer architecture innovations were (intentionally) invisible to higher layers, application and other software developers could reap the benefits of this progress without engaging in it. Higher performance has both made more computationally demanding applications feasible (e.g., virtual assistants, computer vision) and made less demanding applications easier to develop by enabling higher-level programming abstractions (e.g., scripting languages and reusable components). Improvements in computer system cost-effectiveness enabled value creation that could never have been imagined by the field’s founders (e.g., distributed web search sufficiently inexpensive so as to be covered by advertising links).

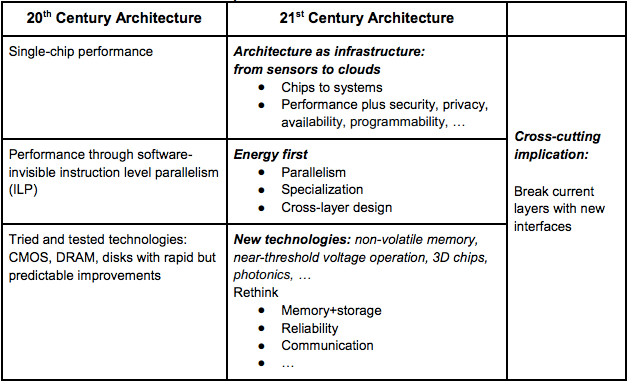

The wide benefits of computer performance growth are clear. Recently, Danowitz et al. [“CPU DB: Recording Microprocessor History,” CACM 04/2012] apportioned computer performance growth roughly equally between technology and architecture, with architecture credited with ~80× improvement since 1985. As semiconductor technology approaches its “end-of-the-road” (see below), computer architecture will need to play an increasing role in enabling future ICT innovation. But instead of asking, “How can I make my chip run faster?” architects must now ask, “How can I enable the 21st century infrastructure, from sensors to clouds, adding value from performance to privacy, but without the benefit of near-perfect technology scaling?” The challenges are many, but with appropriate investment, opportunities abound. Underlying these opportunities is a common theme that future architecture innovations will require the engagement of and investments from innovators in other ICT layers …

The authors go on to describe in detail the inflection point we are seeing in ICT as well as the opportunities we as a community have in the years and decades ahead, including key research directions.

We encourage all of you to take a look at the white paper — and to share your thoughts in the space below.

And on behalf of the CCC, we thank our colleagues in the computer architecture research community for producing a clear, thoughtful, and compelling report in very short order! (The names of all contributors appear on the final page of the report. Special kudos to Mark Hill of the University of Wisconsin – Madison, who did an extraordinary job of chairing the effort.)

(Contributed by Erwin Gianchandani, CCC Director, and Ed Lazowska, CCC Council Chair)

I think the most pressing need is for architectures that support secure computing. A lot of work was done on this in the 1970s at MIT based on the Multics experience, but it seems it was all forgotten? One example is "Cooperation of Mutually Suspicious Subsystems in a Computer Utility" by Michael D. Schroeder, September 1972:

http://publications.csail.mit.edu/lcs/pubs/pdf/MIT-LCS-TR-104.pdf

I'm not saying the above is what should be done, but rather we should revive the whole area of research so that hardware can help enforce security even in the face of buggy software.