A couple months ago, I blogged about David Ferrucci’s excellent keynote at this year’s Federated Computing Research Conference (FCRC) in San Jose, CA — noting how Ferrucci stepped through the creation of Watson, from conception of the “Jeopardy!” challenge in 2004 to the supercomputer’s nationally televised victory earlier this year.

Well, now, ACM has made Ferrucci’s talk — along with the rest of this year’s FCRC plenaries — available through its Digital Library free of cost. Simply click here and create a free profile to either download or stream the 40-minute presentation.

And read the original summary of Ferrucci’s talk after the jump…

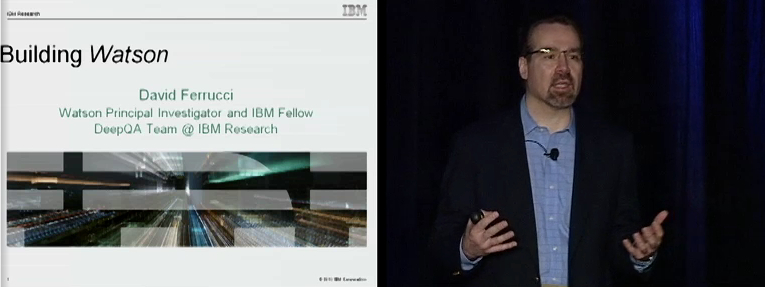

![David Ferrucci, IBM [image courtesy IBM]](https://cccblog.org/wp-content/uploads/2011/06/img-video-david-ferruci-300x261.jpg)

David Ferrucci’s official title is “IBM Fellow and Leader of the Semantic Analysis and Integration Department at IBM’s T.J. Watson Research Center.” But to the world, he’s the genius behind Watson, the question-answering supercomputer system that bested two humans in a nationally televised broadcast of the popular game show Jeopardy! earlier this year. And not just any two humans, but the two very best players in the show’s 27-year history. On Monday, Ferrucci delivered a fantastic keynote at the ACM’s 2011 Federated Computing Research Conference in San Jose, CA.


Ferrucci walked the audience — nearly 2,000 computer scientists from around the country — through the creation of Watson, from its initial conception in 2004 to its nationally televised victory this past February.

“The story goes,” he began, “that an IBM vice president was dining at a restaurant” when, suddenly, everyone around him got up and rushed toward a TV. “It turns out everyone was watching Jeopardy!” because Ken Jennings — one of Watson’s two competitors — was winning yet another game in the midst of his record-breaking streak of 74 consecutive victories. The IBM VP wondered if a computer could compete on Jeopardy!, Ferrucci said, and the idea was shopped around the company’s divisions for a couple years before it arrived on Ferrucci’s desk.

Ferrucci said the Jeopardy! “grand challenge” interested him from the start because it required reconciling computer programs, which are natively explicit, fast, and exciting in their calculations over numbers and symbols, with natural language, which is implicit, inherently contextual, and often imprecise. Moreover, it constituted a “compelling and notable way to drive and measure technology of automatic question answering along five key dimensions: broad, open domain; complex language; high precision; accurate confidence; and high speed.”

Ferrucci’s team began by looking at over 25,000 randomly sampled Jeopardy! clues, classifying them into about 2,500 distinct types. They found a very long-tailed distribution: even the most frequent type occurred only three percent of the time.
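A long-tailed distribution means no small set of specialized handlers can cover most clues. The minimal Python sketch below shows the kind of measurement involved, assuming each sampled clue has already been labeled with a type string; the function name and the toy labels are hypothetical, not the team’s data or tooling.

```python
from collections import Counter


def long_tail_stats(type_labels, coverage=0.5):
    """Summarize how concentrated a non-empty list of clue-type labels is.

    Returns (share of the single most frequent type,
             number of distinct types needed to cover `coverage` of all clues,
             total number of distinct types).
    """
    counts = Counter(type_labels)
    total = sum(counts.values())
    ranked = counts.most_common()  # types sorted from most to least frequent
    top_share = ranked[0][1] / total

    covered, needed = 0, 0
    for _, n in ranked:
        covered += n
        needed += 1
        if covered / total >= coverage:
            break
    return top_share, needed, len(counts)


# Toy labels for illustration only; these are NOT the Jeopardy! sample.
toy = ["country"] * 3 + ["president"] * 2 + ["film", "river", "poet", "month", "word"]
top_share, needed, distinct = long_tail_stats(toy)
print(f"top type share: {top_share:.0%}, types to cover half: {needed} of {distinct}")
# prints "top type share: 30%, types to cover half: 2 of 7"
```

The flatter the distribution, the smaller the top share and the more types it takes to reach any given coverage.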

This finding led to several principles that guided their approach:

- Specific, large, hand-crafted models won’t cut it: they’re too slow, too brittle, and too biased. Instead, there’s a need to acquire and analyze information from as-is knowledge sources (by and large, huge quantities of natural language content).

- Intelligence must be derived from many diverse methods — many diverse algorithms (no single one to solve the problem; each one reacting to different weaknesses) must be used; the relative impact of many overlapping methods must be learned; and different levels of certainty must be combined among algorithms and answers.

- Massive parallelism is a key enabler.
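The principle that “the relative impact of many overlapping methods must be learned” has a standard textbook form: treat each algorithm’s score as a feature and fit a logistic regression that turns those scores into one confidence. The sketch below shows that generic technique in plain Python under toy assumptions (three hypothetical scorers, six labeled candidates); it is an illustration, not IBM’s implementation.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_combiner(rows, labels, lr=0.5, epochs=2000):
    """Learn one weight per scoring algorithm (plus a bias) by logistic regression.

    rows: one list of scores per candidate answer, one score per algorithm.
    labels: 1 if that candidate was the correct answer, else 0.
    """
    n = len(rows[0])
    weights, bias = [0.0] * n, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * n, 0.0
        for x, y in zip(rows, labels):
            # Error between the predicted probability and the true label
            err = sigmoid(bias + sum(w * xi for w, xi in zip(weights, x))) - y
            for j, xi in enumerate(x):
                grad_w[j] += err * xi
            grad_b += err
        # One batch gradient-descent step on the mean log-loss
        m = len(rows)
        weights = [w - lr * g / m for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / m
    return weights, bias


def combined_confidence(weights, bias, x):
    """Merge one candidate's per-algorithm scores into a confidence in [0, 1]."""
    return sigmoid(bias + sum(w * xi for w, xi in zip(weights, x)))


# Toy training data: scores from three hypothetical algorithms per candidate.
rows = [[0.9, 0.2, 0.8], [0.8, 0.9, 0.7], [0.2, 0.1, 0.3],
        [0.1, 0.8, 0.2], [0.7, 0.5, 0.9], [0.3, 0.4, 0.1]]
labels = [1, 1, 0, 0, 1, 0]
weights, bias = train_combiner(rows, labels)
strong = combined_confidence(weights, bias, [0.9, 0.5, 0.9])
weak = combined_confidence(weights, bias, [0.1, 0.5, 0.1])
```

In this toy data the first and third scorers separate right from wrong answers, so training gives them positive weight; the point is that the weights come from data rather than being hand-set.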

Because Watson had to be completely self-contained (it couldn’t be connected to the Internet, since the human contestants did not have that luxury), Ferrucci and his team pursued automatic learning by reading volumes of text, parsing sentences (into nouns, verbs, tenses, etc.) to construct syntactic frames and, eventually, semantic frames. They then evaluated possibilities and the corresponding evidence: many candidate answers (CAs) are generated from many different searches, and each one is evaluated along different dimensions of evidence.
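The generate-then-score loop described above can be sketched in Python as follows. The search and scorer functions are hypothetical placeholders (a real system would run them over its stored text corpus), and the sketch shows only the data flow: merge candidates proposed by many searches, then attach one score per evidence dimension to each candidate.

```python
from collections import defaultdict


def generate_candidates(question, searches):
    """Run every search and merge the candidate answers they propose.

    searches: mapping of search name -> function(question) -> list of strings.
    Returns a dict: normalized candidate -> set of searches that proposed it.
    """
    found_by = defaultdict(set)
    for name, search in searches.items():
        for cand in search(question):
            # Crude normalization so "Chicago" and "chicago " merge
            found_by[cand.strip().lower()].add(name)
    return dict(found_by)


def score_candidates(question, candidates, scorers):
    """Evaluate each candidate along several evidence dimensions.

    scorers: mapping of dimension name -> function(question, candidate) -> float.
    Returns candidate -> {dimension: score}, one feature vector per candidate.
    """
    return {
        cand: {dim: scorer(question, cand) for dim, scorer in scorers.items()}
        for cand in candidates
    }


# Toy stand-ins for real searches over a text corpus.
searches = {
    "title_search": lambda q: ["Chicago", "Toronto"],
    "passage_search": lambda q: ["chicago ", "Omaha"],
}
question = "Its largest airport is named for a World War II hero"
cands = generate_candidates(question, searches)
scorers = {
    # How many independent searches proposed this candidate
    "search_support": lambda q, c: float(len(cands[c])),
    # Hypothetical type check against a toy list of U.S. cities
    "type_match": lambda q, c: 1.0 if c in {"chicago", "omaha"} else 0.0,
}
scores = score_candidates(question, cands, scorers)
```

Each candidate ends up with a small feature vector; combining those vectors into a single confidence is a separate, learned step.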

For some Jeopardy! categories and questions, Ferrucci and his team introduced temporal and geospatial reasoning, as well as statistical paraphrasing. For others (e.g., “Rhyme Time,” a particularly common category as avid Jeopardy! viewers surely know), they applied decomposition and synthesis, solving subproblems in parallel. And for still others, they utilized “in-category learning,” i.e., Watson attempts to infer the type of thing being asked from the previous question(s).
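In-category learning can be pictured as a running tally of what kinds of answers a category has produced so far. This is a minimal illustration, assuming a hypothetical `type_of` lookup that maps an answer to a type label; the talk did not describe how Watson’s version actually worked.

```python
from collections import Counter


def infer_category_type(revealed_answers, type_of):
    """Guess what kind of answer a category wants from answers already revealed.

    revealed_answers: correct responses to earlier clues in the same category.
    type_of: hypothetical lookup, answer -> type label, or None if unknown.
    Returns (most common type, share of typed answers with that type),
    or (None, 0.0) when nothing usable has been revealed yet.
    """
    types = Counter(t for t in map(type_of, revealed_answers) if t is not None)
    if not types:
        return None, 0.0
    label, count = types.most_common(1)[0]
    return label, count / sum(types.values())


# Toy type table standing in for a real type-lookup resource.
toy_types = {"August": "month", "October": "month", "Easter": "holiday"}
print(infer_category_type(["August", "October", "Easter"], toy_types.get))
# most common type is "month", covering 2 of the 3 revealed answers
```

The inferred type would act as one more piece of evidence when scoring candidates for the next clue, rather than as a rule.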

Ferrucci and his team ultimately designed a system that generates and scores many hypotheses using a combination of thousands of natural language processing, information retrieval, machine learning, and reasoning algorithms. These gather, evaluate, weigh, and balance different types of evidence to deliver the answer with the best support the system can find. In short:

- There are multiple interpretations of a question, each with hundreds of sources, hundreds of possible answers, thousands of pieces of evidence, and hundreds of thousands of scores from many deep analysis algorithms. And all of these are balanced and combined.

- A final confidence is calculated and a threshold for answering must be exceeded. Importantly, this threshold is shifted during the game. If Watson is ahead, then the confidence threshold is raised. If Watson is behind, then the confidence threshold is lowered so that Watson can be aggressive and rally back. (This also means that Watson can look stupid sometimes — and this was a very serious consideration because, as Ferrucci remarked, “I was told many times that I was mucking with the IBM brand.”)
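The threshold rule described above can be sketched as a small function. The base threshold and the size of the shift are hypothetical values chosen for illustration, not Watson’s tuned parameters, and this version looks only at whether Watson is ahead or behind.

```python
def answer_threshold(my_score, best_opponent_score, base=0.50, shift=0.15):
    """Confidence required before buzzing in, adjusted for the game state.

    Ahead  -> raise the bar (protect the lead).
    Behind -> lower the bar (be aggressive and rally back).
    base and shift are hypothetical illustration values.
    """
    if my_score > best_opponent_score:
        return min(1.0, base + shift)
    if my_score < best_opponent_score:
        return max(0.0, base - shift)
    return base


def should_answer(confidence, my_score, best_opponent_score, final_jeopardy=False):
    # Assumption: in Final Jeopardy! a response is always given,
    # regardless of how confident the system is.
    if final_jeopardy:
        return True
    return confidence >= answer_threshold(my_score, best_opponent_score)


print(should_answer(0.40, 5000, 10000))   # behind: 0.40 clears the lowered bar
print(should_answer(0.40, 15000, 10000))  # ahead: 0.40 falls short of the raised bar
```

Lowering the bar when behind is exactly what makes occasional wrong, “stupid-looking” answers more likely.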

All the while, the team had to balance game strategy (managing the luck of the draw, e.g., Daily Doubles and deciding how much to bet) with deep analysis, speed, and results. On speed, Watson’s computation was optimized on over 2,800 POWER7 processing cores, going from two hours per question on a single CPU to an average of just three seconds.
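A quick back-of-the-envelope check on the speed figures, using only the numbers quoted in the talk:

$$
\frac{2\ \text{h}}{3\ \text{s}} = \frac{7200\ \text{s}}{3\ \text{s}} = 2400
$$

That is roughly a 2,400-fold reduction in time per question, the same order of magnitude as the more than 2,800 cores Watson ran on.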

Ferrucci and his team triumphed on TV, of course. But according to Ferrucci, Watson’s Jeopardy! victory was validated by the numbers, too. For example, a typical winning human performance involves acquiring (i.e., being confident enough and fast enough to buzz in before the competitors) 47 percent of the clues and getting 90 percent of those answers correct. (As a point of reference, Ken Jennings acquired 62 percent of the clues during his winning streak, with 92 percent precision on those clues. “He [essentially] assumed he knew all things,” Ferrucci said to much laughter, “and unfortunately for his competitors, he did know all things.”)

The precision of the IBM question-answering system with which Ferrucci and his team started (the “2007 IBM QA System,” he called it) plateaued at 14 percent. “This was the challenge that [the team] took on in early 2007” when it first started working on Watson, he said. By late 2010, Watson was consistently playing in the “winners’ cloud.”
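The human figures above are points on two axes: the fraction of clues a player attempts and the precision on those attempts. One way to place a confidence-scoring system on the same axes is to let it answer only its most confident fraction of clues and measure precision there; sweeping that fraction traces a curve that can be compared against human performance. A minimal sketch, with a hypothetical function name and toy results:

```python
def precision_at_coverage(results, coverage):
    """Precision on the `coverage` fraction of clues the system is most confident about.

    results: list of (confidence, was_correct) pairs, one per clue.
    """
    ranked = sorted(results, key=lambda r: r[0], reverse=True)
    k = max(1, round(coverage * len(ranked)))  # number of clues "answered"
    return sum(1 for _, ok in ranked[:k] if ok) / k


# Toy results for ten clues, not Watson's data.
results = [(0.95, True), (0.90, True), (0.80, False), (0.70, True), (0.60, True),
           (0.40, False), (0.30, False), (0.20, True), (0.15, False), (0.10, False)]
for cov in (0.2, 0.5, 1.0):
    print(f"answer {cov:.0%} of clues -> precision {precision_at_coverage(results, cov):.0%}")
```

Well-calibrated confidence is what makes the curve slope downward: answering fewer, more confident clues should raise precision.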

Ferrucci also noted that he and his team went back and measured several of Watson’s key technical capabilities (question and content analysis, relation extraction, linguistic frame interpretation, etc.) and found that they exceeded the best performance previously reported in the literature on standard metrics. “This was very gratifying [for us],” he said, because it demonstrated that Watson was indeed a useful challenge for the field: the IBM team pushed the envelope in a number of key areas in computer science.

Ferrucci and his team accomplished their feat by pursuing:

- Goal-oriented system-level metrics and longer-term incentives. For example, they conducted a “headroom analysis” to see what value would be added if the project was successful — before the project was deemed a “go.”

- Extreme collaboration.

- Diverse skills all in one room.

- Disciplined engineering and evaluation — including biweekly end-to-end integration runs and evaluations. By the end, each one of these runs was producing over 10 gigabytes of error analysis spanning 8,000 test cases, requiring the team to build tools just to understand what the system was doing.

But along the way, they faced huge growing pains, too, resulting in considerable algorithm refinement. Ferrucci illustrated a few of the most vivid challenges by displaying relatively easy questions that Watson failed to answer correctly during development:

- “Decades before Lincoln, Daniel Webster spoke of government ‘made for’, ‘made by’, and ‘answerable to’ them.” The correct answer is “people.” Watson said “no one.”

- “An exclamation point was warranted for the ‘End Of’ This! in 1918.” The correct answer is World War I. Watson said “a sentence.”

Ferrucci concluded by describing his view of the potential business applications of Watson, spanning healthcare and the life sciences, tech support, enterprise knowledge management and business intelligence, and government (including improved information sharing and security). Key to all of this, he noted, are evidence profiles from disparate data, a feature used in the development of Watson. Each dimension contributes to supporting or refuting hypotheses based on the strength of the evidence, the importance of the dimension, and so on. And as more information is collected, evidence profiles may evolve.
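An evidence profile can be sketched as a per-dimension ledger of supporting and refuting evidence for one hypothesis. The class name, dimension names, and importance weights below are hypothetical; the talk described the idea, not a data structure.

```python
from collections import defaultdict


class EvidenceProfile:
    """Per-dimension evidence for a single hypothesis (hypothetical structure).

    Positive strengths support the hypothesis; negative strengths refute it.
    Dimension importances are assumed inputs (e.g., learned elsewhere).
    """

    def __init__(self, importance):
        self.importance = importance        # dimension -> weight
        self.evidence = defaultdict(list)   # dimension -> list of strengths

    def add(self, dimension, strength):
        # The profile evolves as more information is collected
        self.evidence[dimension].append(strength)

    def contributions(self):
        # Net contribution of each dimension: importance x summed strength
        return {
            dim: self.importance.get(dim, 0.0) * sum(vals)
            for dim, vals in self.evidence.items()
        }

    def total(self):
        return sum(self.contributions().values())


profile = EvidenceProfile({"passage": 1.0, "type_match": 2.0})
profile.add("passage", 0.5)
profile.add("passage", 0.25)
profile.add("type_match", -0.5)   # refuting evidence on an important dimension
print(profile.contributions(), profile.total())
```

Keeping the per-dimension breakdown, rather than only the total, is what lets a user see why a hypothesis is supported or refuted.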

Finally, a word about Toronto…

Despite Watson’s brilliant success, lots of people were stunned by its most embarrassing moment, toward the end of one of the Jeopardy! episodes. In response to the Final Jeopardy! clue, “Its largest airport is named for a World War II hero, its second largest for a World War II battle,” Watson said, “What is Toronto?” The answer wouldn’t necessarily have raised an eyebrow, except that the category was “U.S. Cities.” So what went wrong?

Ferrucci described how Watson learned over time that the category was not a reliable driver of the answer. In fact, there was no hard filter on the category, or on anything else for that matter. Watson’s engineers had experimented with filters early on, only to find that they produced bad results. For example, after seeing the supercomputer repeatedly fail to give a month when the category was “Months of the Year,” Ferrucci finally instructed his team to add a filter mandating that whenever a clue asked for a month, the answer must be one of the 12 months. Then Watson failed to produce “Ramadan” when posed with the clue, “Muslims may hope this ninth month of their calendar goes by fast.”
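The contrast between a hard filter and softer evidence can be sketched as below. The candidate scores and the penalty value are invented for illustration; the talk did not describe how Watson weighted category evidence, only that it had no hard filter.

```python
GREGORIAN_MONTHS = {
    "january", "february", "march", "april", "may", "june", "july",
    "august", "september", "october", "november", "december",
}


def hard_filter(scores):
    """Drop every candidate that is not one of the 12 Gregorian months."""
    return {c: s for c, s in scores.items() if c.lower() in GREGORIAN_MONTHS}


def soft_penalty(scores, penalty=0.2):
    """Keep every candidate, but lower the score of non-Gregorian months."""
    return {
        c: (s if c.lower() in GREGORIAN_MONTHS else s - penalty)
        for c, s in scores.items()
    }


# Invented scores for the "ninth month ... goes by fast" clue
scores = {"Ramadan": 0.7, "September": 0.3}
filtered_hard = hard_filter(scores)
filtered_soft = soft_penalty(scores)
print(max(filtered_hard, key=filtered_hard.get))  # September: the right answer was filtered out
print(max(filtered_soft, key=filtered_soft.get))  # Ramadan: penalized, but still on top
```

With a soft penalty, strong enough evidence can still override the category signal; with a hard filter, it never can.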

Beyond the question of hard filters, Watson’s overall confidence for this particular clue was also below its answering threshold: its top three answers were Toronto (14 percent), Chicago (11 percent), and Omaha (10 percent). It had to answer anyway because this was the Final Jeopardy! question.

(Contributed by Erwin Gianchandani, CCC Director)
