A recent article in Nature highlights a major challenge facing computer engineers: as microcircuits shrink and are packed more densely, they run hotter. Engineers must now find new ways to keep cutting-edge computer parts cool.

Current trends suggest that the next milestone in computing, an exaflop machine performing at 10¹⁸ flops, would consume hundreds of megawatts of power (equivalent to the output of a small nuclear plant) and turn virtually all of that energy into heat.
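To put those figures in perspective, here is a rough back-of-the-envelope calculation. It assumes, purely for illustration, a machine drawing 200 MW (one value within the "hundreds of megawatts" range quoted above) while sustaining 10¹⁸ floating-point operations per second, with essentially all of that power ending up as heat:

$$
\frac{10^{18}\ \text{flop/s}}{2 \times 10^{8}\ \text{W}} = 5 \times 10^{9}\ \text{flop/s per watt},
\qquad
\frac{2 \times 10^{8}\ \text{J/s}}{10^{18}\ \text{flop/s}} = 2 \times 10^{-10}\ \text{J} = 200\ \text{pJ per operation}.
$$

Under that assumed power draw, every floating-point operation would leave roughly 200 picojoules of heat behind, and the cooling system would have to carry away the full 200 MW continuously.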

Increasingly, heat looms as the single largest obstacle to computing’s continued advancement. The problem is fundamental: the smaller and more densely packed circuits become, the hotter they get. “The heat flux generated by today’s microprocessors is loosely comparable to that on the Sun’s surface,” says Suresh Garimella, a specialist in computer-energy management at Purdue University in West Lafayette, Indiana. “But unlike the Sun, the devices must be cooled to temperatures lower than 100 °C” to function properly, he says.

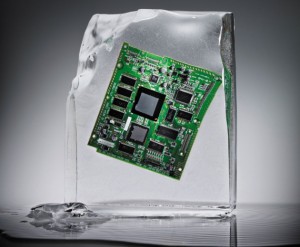

To meet that increasingly difficult goal, engineers are exploring new ways of cooling, for example by pumping liquid coolants directly onto chips rather than circulating air around them. In a more radical vein, researchers are also seeking to reduce heat flux by rethinking how the circuitry is packaged. Instead of being confined to two-dimensional (2D) slabs, for example, circuits might be arrayed in 3D grids and networks inspired by the architecture of the brain, which manages to carry out massive computations without any special cooling gear. Perhaps future supercomputers will not even be powered by electrical currents carried along metal wires, but driven electrochemically by ions in the coolant flow.

This is not the most glamorous work in computing — certainly not compared to much-publicized efforts to make electronic devices ever smaller and faster. But those high-profile innovations will count for little unless engineers crack the problem of heat.