The following is a special contribution to this blog by CCC Executive Council Member Mark Hill and workshop organizers Hanspeter Pfister, An Wang Professor of Computer Science at the Harvard School of Engineering and Applied Sciences, and Georg Seelig, Assistant Professor of Computer Science & Engineering and Electrical Engineering at the University of Washington.

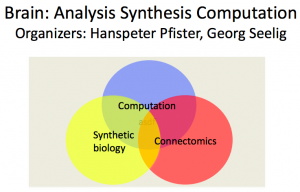

Hanspeter Pfister and Georg Seelig organized a two-day DARPA ISAT workshop on Brain Analysis, Synthesis, and Computation (BASC) in August 2013. The workshop focused on the state of the art in brain analysis (structural and functional) and brain synthesis, and on how these areas affect the future of computation. It was well attended by more than 60 experts in neuroscience, computer science, engineering, and biology, as well as industry representatives.

The highlights of the first day were keynotes by Jeff Lichtman (Harvard) on connectomics (Analysis), Robert Kirkton (Duke) on genetic engineering of excitable cells (Synthesis), and Adrienne Fairhall (UW) on simulations in neuroscience (Computation), along with an evening keynote by Jack Gallant (UC Berkeley) on mapping the cognitive functions of the brain. These keynotes gave a broad overview of the state of the art in each area and set the tone for the attendees' subsequent breakout discussions. Among the many questions explored at the workshop were:

Analysis: How is the brain wired and how does that wiring enable computation? To understand how a brain computes, is it necessary to identify the “wiring diagram” or connectome at the neural level? Is it possible to identify recurring circuit motifs that can be associated with specific computational and regulatory roles? How can we measure network dynamics and how does the interplay of structure and dynamics give rise to brain function and, eventually, behavior?

Synthesis: Can we build a functional brain? Tissue engineering and stem cell biology have developed methods for converting stem cells into neurons and for culturing populations of neurons. However, we are still far from being able to systematically “wire up” and “program” such collections of cultured neurons to implement a specific computation. Can we develop a synthetic biology of the brain?

Computation: How does the brain process information? How does the state of the art in analysis and synthesis influence how we understand computation in the brain? How can we use biological “design principles” as guidelines for engineering electronic devices (neuromorphic engineering)? Conversely, can we use more abstract models of computation (Hopfield networks, etc.) as guidelines for engineering synthetic “brains”? If we understood how the brain computes, how would that influence computer architecture, machine learning, and other engineering disciplines?

The publicly released slides are here.