Yoshua Bengio during the Turing Lecture at HLF

Yesterday morning at the Heidelberg Laureate Forum (HLF), laureates Yoshua Bengio (2018 Turing Award), Edvard Moser (2014 Nobel Prize in Physiology or Medicine), and Leslie G. Valiant (1986 Nevanlinna Prize and 2010 Turing Award) each gave a lecture related to artificial intelligence or to modeling the brain.

Yoshua Bengio’s lecture, “Deep Learning for AI,” offered a retrospective on some of the key principles behind the recent successes of deep learning. Dr. Bengio’s work has mostly been on neural networks, which are inspired by computation in the human brain. One key insight in the field came from representing words as vectors of numbers. This allowed relationships between words to be learned (e.g. that cats and dogs are both household pets) without anyone having to handcraft the vectors.
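To make the idea of words as vectors a little more concrete, here is a toy sketch. The three-dimensional vectors in `EMBEDDINGS` are invented purely for illustration. Real learned embeddings have hundreds of dimensions and are learned from text rather than written by hand. The sketch only shows how "related words end up close together" can be measured, using cosine similarity.

```python
import math

# Hypothetical 3-dimensional word vectors, made up for illustration only.
# In a trained model these numbers would be learned from data, not handcrafted.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1],
    "dog": [0.8, 0.9, 0.2],
    "car": [0.1, 0.2, 0.9],
}

def cosine_similarity(u, v):
    """Cosine of the angle between vectors u and v (1.0 means same direction)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

if __name__ == "__main__":
    cat, dog, car = (EMBEDDINGS[w] for w in ("cat", "dog", "car"))
    print(f"cat vs dog: {cosine_similarity(cat, dog):.3f}")
    print(f"cat vs car: {cosine_similarity(cat, car):.3f}")
```

With these made-up numbers, "cat" and "dog" score far closer to each other than "cat" and "car" do, which is the kind of structure a model learns on its own.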

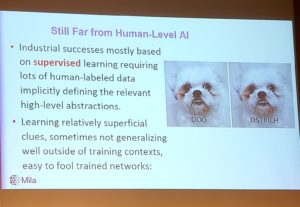

AI fooled into believing a dog is an ostrich

Unfortunately, we are still far from human-level AI. Many recent industrial successes have been based mostly on supervised learning, which requires large amounts of human-labeled data that implicitly define the relevant high-level abstractions. Yet it is still easy to fool many of these trained systems with changes that would obviously not trick a real human being (see the slide to the right). Bengio suggested that progress could come from making further use of attention mechanisms. A standard neural network processes all of its input in the same way, but we know that our brains focus computation in specific ways based on the preceding context (i.e. they pay attention to what is important). Applying attention mechanisms allows for more responsive and dynamic computation and might enable machines to do things that look more like reasoning.
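The lecture did not tie this idea to one specific formula, so the following is only a minimal sketch of one common formulation, scaled dot-product attention, written in plain Python with invented toy vectors. A query is compared against a set of keys; the comparison scores are turned into weights with a softmax; and the output is a weighted average of the corresponding values. Inputs that match the current context get most of the weight, which is the "focus on what is important" behavior described above.

```python
import math

def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Scaled dot-product attention for a single query.

    Each key is scored against the query, the scores become weights via
    softmax, and the output is the weighted average of the values.
    """
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    output = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(dim)]
    return weights, output

if __name__ == "__main__":
    # Toy inputs (invented): the query most resembles the second key.
    query = [0.0, 1.0]
    keys = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
    values = [[10.0, 0.0], [0.0, 10.0], [5.0, 5.0]]
    weights, output = attend(query, keys, values)
    print("weights:", [round(w, 3) for w in weights])
    print("output:", [round(x, 3) for x in output])
```

Unlike a fixed layer that treats every input identically, the weights here are recomputed for every query, so the same network can focus on different inputs depending on context.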

Bengio also stressed the importance of directing AI toward social good. While AI can of course be beneficial or benign, it can also be abused and used to disenfranchise vulnerable groups. As an example, which he gave later at his press conference, he warned that deep-learning-based facial recognition could be used in weapons that kill specific people or populations without a human in the loop. Bengio has been part of efforts to persuade governments and the UN to sign treaties preventing the use of such systems, but so far these efforts have gained little traction.

When asked during the press conference about the risk of AI displacing workers, Bengio said he believes the risk is real: the job market could change relatively rapidly compared to the past, and even if new jobs are created, old jobs may be destroyed too quickly for people, especially older people, to retrain in time. He argued that a universal basic income (UBI) could be one way to alleviate these impacts. However, even if UBI solved these economic problems, the wealth generated by high tech risks being concentrated in a few wealthy countries. That could be dangerous, because the costs of these technologies (in terms of job loss) could still fall on countries that cannot afford to bear them.

Still, Bengio stressed that computer scientists should “favor ML applications which help the poorest countries, may help with fighting climate change, and improve healthcare and education.” He also highlighted the work of AI Commons, a non-profit he co-founded that seeks to extend the benefits of AI to everyone around the world.

Edvard Moser during the Lindau-Lecture at HLF

Edvard Moser’s lecture, “Space and time: Internal dynamics of the brain’s entorhinal cortex,” focused on a study of mice that sought to understand how mammals process spatial information in the entorhinal cortex and hippocampus. In mammals, place cells (in the hippocampus) and grid cells (in the entorhinal cortex) fire when the animal is at particular locations; a single grid cell fires at many locations that together form a regular hexagonal grid across the environment. This firing enables synaptic plasticity, thus encoding the animal’s position within the environment into memory.

Moser noted that grid cells (and place cells) have been reported in bats, monkeys, and humans, suggesting that they originated early in mammalian evolution. Further study in this area could reveal much about how human memory works, perhaps in ways that could benefit future AI systems.

Finally, Leslie G. Valiant discussed “What are the Computational Challenges for Cortex?” Valiant said that the brain operates under constraints that computing systems do not face, such as sparsely connected neurons, limited resources, and the lack of an addressing mechanism for memory recall. Understanding these constraints is necessary to keep advancing neuro-inspired computing techniques.

Leslie G. Valiant speaks at HLF

As examples, Valiant described two types of “Random Access Tasks” that the human brain performs:

- Type 1 is allocating a new concept to storage (e.g. the first time you heard of Boris Johnson). “For any stored items A (e.g. Boris), B (e.g. Johnson), allocate neurons to new item C (Boris Johnson) and change synaptic weights so that in future A and B active will cause C to be active also.”

- Type 2 is associating a stored concept with another previously stored concept. For example, you want to associate “Boris Johnson” with “Prime Minister”: for any stored items A (Boris Johnson) and B (Prime Minister), change synaptic weights so that in the future, when A is active, B is also active.
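The two tasks can be made concrete with a deliberately simple toy. This is not Valiant's model or his algorithms; it is a sketch under several assumptions of our own: each item is a small set of threshold neurons, a neuron fires when the summed weight from its active inputs reaches its threshold, and weights can be set in a single step. The class `ToyCortex` and its methods `store`, `allocate`, `associate`, and `recall` are hypothetical names chosen for illustration.

```python
import math
from collections import defaultdict

class ToyCortex:
    """Hypothetical toy network of threshold neurons (illustrative only).

    Each item is represented by a small set of neurons; a neuron fires when
    the summed weight from its active inputs reaches its threshold.
    """

    def __init__(self, neurons_per_item=3):
        self.k = neurons_per_item
        self.next_id = 0
        self.items = {}                   # item name -> set of neuron ids
        self.threshold = {}               # neuron id -> firing threshold
        self.weights = defaultdict(dict)  # post neuron -> {pre neuron: weight}

    def _new_neurons(self, threshold):
        ids = set(range(self.next_id, self.next_id + self.k))
        self.next_id += self.k
        for n in ids:
            self.threshold[n] = threshold
        return ids

    def store(self, name):
        """Store a primitive item, activated directly from outside.
        Its threshold of k is an arbitrary choice for this toy."""
        self.items[name] = self._new_neurons(threshold=self.k)

    def allocate(self, a, b, c):
        """Type 1: allocate neurons for new item c so that a AND b activate it."""
        inputs = self.items[a] | self.items[b]
        self.items[c] = self._new_neurons(threshold=len(inputs))
        for post in self.items[c]:
            for pre in inputs:
                self.weights[post][pre] = 1  # every input neuron is needed

    def associate(self, a, b):
        """Type 2: change weights so that activating a also activates b."""
        pres = self.items[a]
        for post in self.items[b]:
            w = math.ceil(self.threshold[post] / len(pres))  # a alone suffices
            for pre in pres:
                self.weights[post][pre] = self.weights[post].get(pre, 0) + w

    def recall(self, *names):
        """Activate the named items, spread activity until nothing new fires,
        and return every item whose neurons are all active."""
        active = set().union(*(self.items[n] for n in names))
        while True:
            newly = {
                post for post, ins in self.weights.items()
                if post not in active
                and sum(w for pre, w in ins.items() if pre in active) >= self.threshold[post]
            }
            if not newly:
                break
            active |= newly
        return {name for name, ids in self.items.items() if ids <= active}

if __name__ == "__main__":
    brain = ToyCortex()
    for word in ("Boris", "Johnson", "Prime Minister"):
        brain.store(word)
    brain.allocate("Boris", "Johnson", "Boris Johnson")  # Type 1
    brain.associate("Boris Johnson", "Prime Minister")   # Type 2
    print(sorted(brain.recall("Boris")))
    print(sorted(brain.recall("Boris", "Johnson")))
```

In this toy, activating "Boris" alone recalls nothing new, while activating "Boris" and "Johnson" together activates the newly allocated "Boris Johnson" item, which in turn activates "Prime Minister". The real difficulty Valiant highlighted, doing this with sparse connections, limited resources, and no addresses, is exactly what the toy sidesteps by wiring neurons directly.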

Some aspects of the mechanisms of knowledge storage are now experimentally testable, and Valiant expects more algorithmic theories will become testable going forward.
