Somali Chaterji

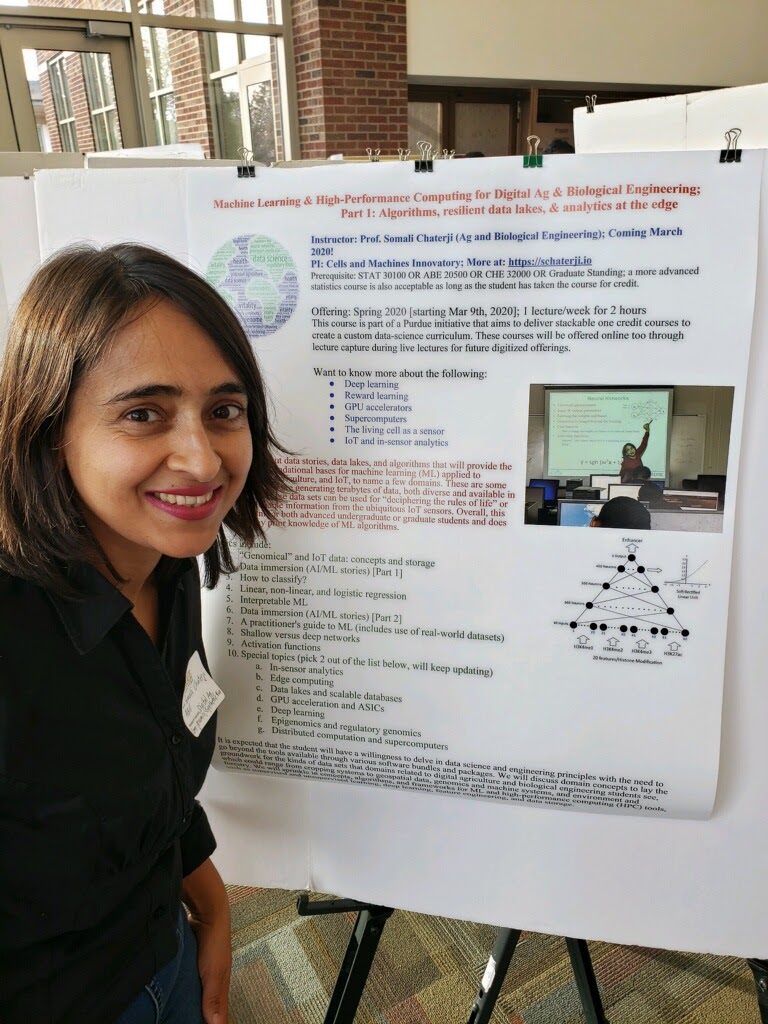

The following Great Innovative Idea is from Somali Chaterji, Assistant Professor in the Department of Agricultural and Biological Engineering at Purdue University where she leads the Innovatory for Cells and Neural Machines.

The Idea

The idea behind my work is that there is strength in numbers: a distributed computing system that needs to run a computationally heavy application on scarce resources can do so by pooling together many weak to moderate devices in a federated setting, with security guarantees. The secret sauce in my work is to apply the right level of approximation at the right point in space (which device) and at the right point in time (which time windows in a data stream), and to use Byzantine-resilient secure aggregation across these devices.
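To make the aggregation idea concrete, here is a minimal sketch of one well-known Byzantine-robust rule, the coordinate-wise median. It is an illustration only: the text above does not say which aggregation rule is used, the function names and the numbers are hypothetical, and the sketch covers robustness to faulty or malicious devices but not the secure (privacy-preserving) part.

```python
from statistics import mean, median

def mean_aggregate(updates):
    """Plain averaging: a single bad device can drag the result arbitrarily far."""
    return [mean(column) for column in zip(*updates)]

def median_aggregate(updates):
    """Coordinate-wise median: tolerates a minority of arbitrary (Byzantine) updates."""
    return [median(column) for column in zip(*updates)]

# Hypothetical model updates (two parameters each) from five devices.
honest = [[1.0, 2.0], [1.1, 1.9], [0.9, 2.1], [1.0, 2.0]]
byzantine = [[100.0, -100.0]]  # one device sends garbage
updates = honest + byzantine

naive = mean_aggregate(updates)     # pulled far away from the honest values
robust = median_aggregate(updates)  # stays with the honest majority
print("mean:  ", naive)
print("median:", robust)
```

With one faulty device out of five, the mean is pulled far from the honest updates, while the median stays at the value the honest majority agrees on.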

I lead a cyber-physical lab, Innovatory for Cells and (Neural) Machines (ICAN), where I have three distinct testbeds to validate my engineered algorithms, primarily leveraging the terabytes of data at the nexus of computer vision and IoT. Among my most recent algorithms are: ApproxDet for approximate video object detection [ACM SenSys, 2020], Janus for benchmarking cloud-based and edge-based technologies on IoT and computer vision data [IEEE-Cloud 2020], and OptimusCloud for joint optimization of cloud virtual machines (VMs) and database parameters in cloud-hosted databases [USENIX ATC 2020].

I have three distinct cyber-physical testbeds, each with characteristics that stress-test the algorithms using features of real-world data. First, a living testbed, consisting of IoT sensor nodes deployed in fields, alongside gateway nodes. These collect real-time data from heterogeneous sensor streams, ranging from soil moisture and temperature sensors to nitrate and other soil nutrient sensors. Second, an embedded testbed consisting of mobile GPUs with different computational resources (CPU and GPU specs) and different communication modalities (from short-range Bluetooth to long-range LoRa). Third, mobile aerial drones that can validate approximate object detection algorithms that are both content-aware (in terms of the dynamism of the video feeds) and contention-aware (in terms of the co-located applications running on these systems-on-chips (SoCs), which have weaker resource isolation capabilities than server-class machines). The drones also bring in the dimension of actuating on decisions, such as spraying soil nutrients based on the sensing and control algorithms.

On the algorithmic side, we are interested in developing approximate algorithms for a rather beefy class of applications, specifically computer vision algorithms. These include human activity recognition algorithms based on video and speedier training of such algorithms using federated learning. We then leverage the three classes of testbeds to test these optimized computer vision algorithms in the wild (living testbed), under heterogeneity (mobile GPUs), as in our recent benchmarking paper for video object detection algorithms, and under dynamism and mobility (aerial UAVs). Further, our objective here is to tune the degree of approximation of these computer vision algorithms at runtime, based on both the video content and the contention (through co-located applications) on these devices. This runtime approximation is done with three requirements in mind: latency, energy consumption, and reliability.
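As a rough illustration of content-aware and contention-aware tuning, the sketch below picks an approximation setting at runtime from a small profile table. Everything in it is an assumption made for the example: the configuration names, the latency and accuracy numbers, and the simple linear models for slowdown and accuracy loss. It is not the method used in ApproxDet.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Config:
    name: str
    base_latency_ms: float  # latency on an idle device (made-up number)
    accuracy: float         # accuracy on near-static video (made-up number)
    motion_penalty: float   # accuracy lost per unit of content dynamism

# Hypothetical approximation knobs: input resolution and how often to re-detect.
CONFIGS = [
    Config("full_res_every_frame", 60.0, 0.80, 0.02),
    Config("half_res_every_frame", 30.0, 0.72, 0.05),
    Config("half_res_track_4_frames", 12.0, 0.68, 0.30),
]

def pick_config(budget_ms, contention, dynamism):
    """Pick the most accurate config that fits the latency budget.

    contention: slowdown factor (>= 1.0) caused by co-located applications.
    dynamism:   how fast the video content changes, in [0, 1].
    """
    feasible = [c for c in CONFIGS if c.base_latency_ms * contention <= budget_ms]
    if not feasible:
        # Nothing fits: fall back to the cheapest setting.
        return min(CONFIGS, key=lambda c: c.base_latency_ms)
    return max(feasible, key=lambda c: c.accuracy - c.motion_penalty * dynamism)

print(pick_config(33.0, contention=1.0, dynamism=0.0).name)
print(pick_config(33.0, contention=1.5, dynamism=0.0).name)
```

In this toy setup, the same 33 ms budget leads to a cheaper setting once a co-located application slows the device down by 1.5x.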

Impact

The potential impact of the idea is that we will be able to leverage the rapidly proliferating networks of sensor and edge devices to do useful computation. Currently they are mainly used as “eyes to the world”, i.e., to collect the data, and then to ship all the data to data centers. This puts pressure on the network and on the compute capacity at the data centers. My idea will relieve these pressures by having the sensor and the edge devices also do processing, close to the source of the data, and with rapid turnaround times. Thus, it will open the vista to many new applications, those that depend on automating processes based on sensor data.

I am excited to have a lab that translates lab-scale algorithms to practice through the intermediate step of prototyping on our testbeds. For example, for computer vision algorithms, we can aggressively optimize the size of the neural networks, or use adaptive (early) exits from the neural network’s layers, to guarantee a 33-millisecond latency budget. Such a budget is considered desirable for augmented/virtual reality (AR/VR) applications for surgical simulations or for improving road safety in autonomous driving, e.g., by converting vehicle-to-infrastructure signals to vehicle-to-vehicle signals. Thus, our goal is to enable adaptive algorithms to automatically interface with the hardware, such as the car’s embedded supercomputing platform (e.g., NVIDIA Jetson embedded technology), and with the context. This context could be that of the video (e.g., dynamic versus more static video frames or the number of objects in every frame) or the context of the disease (where we develop local models for the different conditions, e.g., in Theia for RNA therapeutics). Some of these algorithms will be whitebox (i.e., we will need access to the internals) so that we can federate or distribute the algorithms across devices or servers, and adaptively partition algorithms across the device (on-device computation), edge, and cloud. This partitioning is done as a function of the latency and accuracy requirements of the application.
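The early-exit idea can be sketched in a few lines. This is a simplified illustration under stated assumptions, not our implementation: the network is modeled as a list of stages, each with a hypothetical estimated latency and an exit head that returns a label and a confidence, and the function name and thresholds are invented for the example.

```python
def run_with_early_exit(stages, budget_ms=33.0, confidence_threshold=0.9):
    """Run stages in order and stop at the first confident exit or when time runs out.

    stages: list of (estimated_latency_ms, exit_head) pairs, where exit_head()
            returns (label, confidence) for the prediction at that depth.
    Returns (prediction, spent_ms); prediction is None if no stage fits the budget.
    """
    spent_ms = 0.0
    prediction = None
    for latency_ms, exit_head in stages:
        if spent_ms + latency_ms > budget_ms:
            break  # running this stage would exceed the latency budget
        spent_ms += latency_ms
        prediction = exit_head()
        if prediction[1] >= confidence_threshold:
            break  # confident enough: skip the remaining layers
    return prediction, spent_ms

# Hypothetical three-stage network with made-up latencies and confidences.
stages = [
    (10.0, lambda: ("car", 0.60)),
    (10.0, lambda: ("car", 0.95)),
    (20.0, lambda: ("car", 0.99)),
]
print(run_with_early_exit(stages))
print(run_with_early_exit(stages, confidence_threshold=0.99))
```

In both calls the run stops after the second stage: in the first because the prediction is already confident, in the second because the third stage would not fit in the 33 ms budget.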

Other Research

I lead ICAN at Purdue University. ICAN is an applied data science and engineering lab working on two thrusts: computational genomics and Internet of Things (IoT). These two thrusts are connected both by the machine learning tools and data engineering platforms that we develop to enable precision health and IoT algorithms and by my vision of mapping the energy efficiency of living cells to the artificial sensor nodes that are deployed ubiquitously in farms and factories. My lab is driven to learn and interchange tools between these two thrusts. The overall goal is to enable IoT sensors to perceive and actuate on the one hand (ICAN’s Thrust #1), and to create new algorithms to understand the genome of living cells on the other (Thrust #2). Further, the two application domains enable me to optimize for different domain-specific requirements. For example, for genomics, the performance metric is often throughput, whereas for IoT, the performance metric tends to be more latency-centric, with a focus on faster reaction times.

Researcher’s Background

My background is in biomedical engineering and in computer science. My first federal grant was a National Institutes of Health (NIH) R01 grant on the data engineering side of genomics, specifically to engineer NoSQL databases that can deal with huge influxes of metagenomics data and still maintain high performance (measured in terms of operations/sec or throughput). This was as a postdoc in Computer Science, where my projects largely dealt with improving the backend databases for large-scale genomics, and more specifically, metagenomics databases. This then resulted in creating databases that could also respond to latency Service Level Objectives (SLOs), such that they could deal with latency-sensitive workloads such as in applications in self-driving automobiles or in AR/VR for surgical simulations. That was my entrance to the IoT side of things, where the databases often have to deal with a rapid influx of heterogeneous data streams and have to be more latency-aware, given the near-real-time decision-making needs in IoT and automation.

My website: https://schaterji.io

My Twitter handle: @somalichaterji