Mental well-being is essential to a happy life. With the stresses of the pandemic deepening the mental health crisis in the US and around the world, research on ways technology can improve mental health has never been more crucial. Technology can be detrimental to users’ mental health, but there are also abundant ways it can be used to improve it. CCC organized a panel, “Improving Mental Health and Supporting Self-regulation with Technology,” that outlined the status of many creative technologies that support people’s mental well-being. The panelists were Dr. Tanzeem Choudhury (Cornell University), Dr. Mary Czerwinski (Microsoft Research), and Dr. Shri Narayanan (University of Southern California). The session was moderated by Dr. Holly Yanco (University of Massachusetts Lowell).

Dr. Narayanan started the panel by discussing multimodal machine intelligence technologies for supporting mental health and wellbeing. Technology is advancing in many new, exciting ways that allow it to observe, analyze, understand, and interact with humans. It is important that research in this area be human-centered, meaning that the data and information involved are about, from, and for people. It must be informed by how people perceive, process, and use human data.

When designing technology to help support someone in their moment of anxiety or crisis, researchers need to take into account the bedrock principle that the human condition is not static, but changes all the time. Another important aspect of the human condition is that different health conditions are prevalent at different times across someone’s lifespan.

Dr. Narayanan continued by explaining, “Historically, researchers have taken unimodal approaches to understanding, modeling, and recognizing more objective human processes. For example, in speech recognition research, the goal is to determine from an audio signal ‘what was spoken.’ The goal of speech diarization research is to determine ‘who spoke when’ during multi-party conversations.” These are low-level descriptors of human behavior, and researchers must shift toward using such multimodal signal processing building blocks to model more abstract human behaviors.

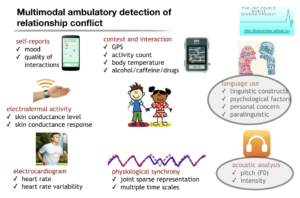

Researchers have successfully used speech and natural language technologies to analyze conventional talk therapy. These techniques could be applied not only in established clinical settings but also in daily life; for instance, technology could anticipate when conflict is about to occur and intervene before the situation gets heated. Many different modes of ambulatory detection can collect data for this kind of intervention (Adela C. Timmons, Theodora Chaspari, Sohyun C. Han, Laura Perrone, Shrikanth S. Narayanan, and Gayla Margolin. Multimodal Detection of Conflict in Couples Using Wearable Technology. IEEE Computer. Special Issue on Quality-of-Life Technologies. March 2017.).

Predicting with high accuracy when moments of conflict will occur would be enormously beneficial, because it would create a window to intervene before the conflict escalates.

Another domain where technology, especially machine learning, could be very useful is the diagnosis of autism spectrum disorder. Current practice relies on observing individuals or administering surveys, and computing researchers could help optimize this process. Computing researchers could also make a great impact in psychotherapy. Figuring out which techniques work, when, and how for individual patients does not easily scale. Understanding what is happening across sessions, along with the outcomes and the context, can be very helpful, and these are goals that machine learning can hopefully achieve.

When merging mental health support and technology, it is essential that the people affected trust the technology. It is pivotal that the technology be inclusive and safe and that it protect privacy. Finally, Dr. Narayanan reiterated the importance of human-centered machine intelligence: the products of machine learning algorithms are only useful when they can be implemented economically at scale and translated into real use for people.

Next, Dr. Choudhury continued the conversation by elaborating on sensory interventions that use this multimodal technology. She emphasized the importance of technology being (1) readily available to deliver an intervention in the moment, and (2) able to work in parallel with a task you are already doing. Technologies should be designed to support and augment existing interventions, and there are many opportunities to use continuous multimodal features to self-regulate in the moment.

Dr. Choudhury then took a step back and emphasized the importance of self-regulation. We all hope to live healthy lives in which we excel and take care of our physical and emotional well-being. Self-regulation affects whether you achieve your goals, and a failure to self-regulate can prevent you from reaching them. For example, a college student who has studied a lot and is well prepared for an exam may have a panic attack during the exam and fail it. The same can happen in the workplace, during a job interview, and elsewhere.

Technology for self-regulation aims to help people achieve their goals and realize their maximum potential. To achieve these aims, the technology needs to be unobtrusive and effortless to use and maintain.

To have an effective intervention, you first have to have an effective way to measure someone’s emotional signals. Some people are more aware than others of when they might be experiencing anxiety, depression, etc. One way to heighten someone’s awareness of these moments is having technology provide an emotion check.

One example is a wearable device that produces subtle vibrations on the wrist that simulate heartbeats. The device detects that an intervention may be needed when someone’s heart rate is rising while they are not exercising, which indicates they are likely experiencing stress or anxiety. Once it recognizes the increase, it can intervene by emitting subtle vibrations at a lower frequency than the elevated heart rate, with the goal of reducing the heart rate. This kind of technology has also proven influential in other situations, such as mitigating alcohol cravings through vibrotactile heart-rate biofeedback.
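The trigger-and-respond logic described above can be sketched as a single decision function. This is a minimal illustration under stated assumptions, not the panelists’ implementation: the function name `choose_vibration_bpm`, the 15 bpm elevation margin, the 0.8 slowdown factor, and the use of elevation above a resting baseline (as a stand-in for “heart rate is rising”) are all hypothetical choices. A real device would also need its own way of detecting exercise, for example from an accelerometer.

```python
from typing import Optional


def choose_vibration_bpm(
    heart_rate_bpm: float,
    resting_bpm: float,
    is_exercising: bool,
    elevation_threshold_bpm: float = 15.0,  # hypothetical trigger margin
    slowdown_factor: float = 0.8,           # hypothetical: pulse at 80% of current rate
) -> Optional[float]:
    """Return a vibration rate (pulses per minute) slower than the current
    heart rate, or None when no intervention is warranted.

    Hypothetical sketch: the thresholds are illustrative, not values from
    any published device.
    """
    # An elevated heart rate during exercise is expected, so never intervene then.
    if is_exercising:
        return None
    # Only intervene when the heart rate is clearly above the wearer's baseline.
    if heart_rate_bpm - resting_bpm < elevation_threshold_bpm:
        return None
    # Pulse more slowly than the current heart rate, but never below the
    # resting baseline, so the cue never asks for an implausibly low rate.
    return max(resting_bpm, heart_rate_bpm * slowdown_factor)


if __name__ == "__main__":
    print(choose_vibration_bpm(100, 70, is_exercising=False))  # stressed while sitting
    print(choose_vibration_bpm(100, 70, is_exercising=True))   # same reading mid-run
```

For instance, a wearer with a resting rate of 70 bpm whose heart rate reaches 100 bpm while sitting would receive pulses at 80 per minute, while the same reading during a run triggers nothing. Keeping the decision in one pure function makes it easy to tune the hypothetical thresholds per person.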

Another technology that provides in-the-moment intervention is a wearable affective touch mechanism that provides a soothing touch when it detects an elevated heart rate. The final example Dr. Choudhury provided was BreathPulse, a small device that attaches to a laptop and subtly influences the user’s airflow; it does not require a break or time-out to have an impact.

Dr. Choudhury concluded that there are ample opportunities for unobtrusive self-regulation technologies that automatically change how users perceive cues from the environment or from themselves, which can be incredibly influential in helping users achieve mental and physical well-being.

Dr. Czerwinski was the final panelist of the session, and she agreed with the idea that the technology used during self-regulation interventions needs to be subtle and timely. She and her colleagues specifically worked with people at risk of suicide. She explained that “mental health disorders are the leading cause of disability and death worldwide, with over 18% of US adults experiencing a mental illness per year.” Evidence-based psychotherapy is effective for many mental health conditions.

One type of evidence-based psychotherapy is Dialectical Behavioral Therapy (DBT), a skills-based therapy designed to help people with complex disorders develop concrete coping skills. While DBT can be successful in helping people manage different disorders, it is difficult to quantify the effectiveness of the strategy.

Some approaches, such as mobile mental health interventions, aim to reduce the financial and time barriers associated with in-person therapy and to broaden engagement across diverse populations. However, most of these interventions do not follow evidence-based principles and are therefore widely perceived as ineffective.

Dr. Czerwinski continued by expanding on a promising combination of these two interventions: “The translation of evidence-based psychotherapy into mobile apps, and the collection of usage data within those apps, provides new opportunities to conduct analysis on the effectiveness of these interventions.” This data is informing the creation of designs that provide the best possible support.

Dr. Czerwinski and her colleagues developed an app called Pocket Skills, which is composed of several modules and skills that are designed to provide holistic support for DBT (Schroeder, J., Wilkes, C., Rowan, K., Toledo, A., Paradiso, A., Czerwinski, M., Mark, G. and Linehan, M.M. “Pocket Skills: A Conversational Mobile Web App to Support Dialectical Behavioral Therapy.” CHI 2018). Through the collection of data on the app, they can examine the effectiveness of individual DBT skills.

The skills section of the app helps people learn and practice DBT skills. Dr. Czerwinski explained: “For example, someone feeling emotionally distressed may want to check the facts of the situation to verify that their emotion fits the actual situation.” Another important aspect of the app is that it features the presence of DBT’s creator, Marsha Linehan, who is very well known in the community.

Dr. Czerwinski and her team used data from a month-long field study of Pocket Skills with 100 participants, applied a combination of statistical and machine learning methods, and found that the app was broadly effective. They drew this conclusion from participant characteristics collected through surveys and from app usage data, including self-reported ratings of skill effectiveness. For example, Emotion Regulation skills asked people to rate their emotional intensity before and after completing the skill. For most users, it was crucial for the intervention to take place in the moment of distress.
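The before-and-after intensity ratings mentioned above lend themselves to a simple per-skill summary. The sketch below uses two hypothetical helpers, `mean_intensity_reduction` and `rank_skills`, that average the drop in self-reported intensity for each skill and sort skills by it. It only illustrates that arithmetic; it is not the statistical or machine learning analysis the team actually used, and the skill names and ratings in the example are made up.

```python
from collections import defaultdict
from statistics import mean


def mean_intensity_reduction(ratings):
    """Average drop in self-reported intensity per skill.

    ratings: iterable of (skill, before, after) tuples, where before/after are
    intensity ratings taken around one use of the skill (hypothetical format).
    Returns {skill: mean(before - after)}; larger values mean bigger reductions.
    """
    diffs = defaultdict(list)
    for skill, before, after in ratings:
        diffs[skill].append(before - after)
    return {skill: mean(d) for skill, d in diffs.items()}


def rank_skills(ratings):
    """Skills sorted from largest to smallest average intensity reduction."""
    reductions = mean_intensity_reduction(ratings)
    return sorted(reductions.items(), key=lambda kv: kv[1], reverse=True)


if __name__ == "__main__":
    # Made-up ratings for illustration only; not data from the study.
    example = [("skill_a", 80, 50), ("skill_a", 60, 40), ("skill_b", 70, 65)]
    print(mean_intensity_reduction(example))
    print(rank_skills(example))
```

With these made-up ratings, `skill_a` reduces intensity by 25 points on average and `skill_b` by 5. A per-skill average like this ignores the individual differences the study highlights, which is one reason a richer model would be needed in practice.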

After positing a variety of research questions, they drew the following conclusions: (1) “We found that most skills are used when a person is in distress and some were more helpful in reducing distress than others, influenced by individual differences, suggesting that, designers should incorporate a range of contextual information” and (2) “We also saw that skills that work could lead to overall mental health improvement, and such effective skills could be predicted using machine learning methods. Therefore, digital interventions could be infused with intelligent support and personalized skill recommendations.”

After Dr. Czerwinski concluded her talk, the floor was opened for the Q&A portion of the session. The first question was asked by Dr. Holly Yanco, CCC Council Member and the moderator of the panel: What barriers to these technologies being utilized do you see?

- Dr. Narayanan: Today, what is the status quo between those in need and the providers? There is a need to build and improve trust. Access to quality mental health care at an affordable cost is a huge barrier worldwide, and we need to see what role technology can play in mitigating it. There also needs to be greater awareness of the importance of mental health. Mental health conditions are often hidden, and there is a large stigma around mental health. How can technology improve awareness?

- Dr. Choudhury: I agree that technology, clinical care, and mental health are part of a complex system. How does this technology fit in with the delivery of care? The healthcare market is difficult to break into, so making small changes to the healthcare system is best. The quality of care patients receive is not uniform. How do tools, sensing, and AI improve the quality of care without compromising the carefully crafted structure through which these therapy tools are delivered? How can it help them take action, and what is the right thing to surface? The economics of healthcare delivery are also important. If we can address the economic pain points, we can see more widespread adoption. These three facets (foundational research, care delivery, and economics) are all important.

- Dr. Czerwinski: An even simpler thing that gets in the way is ease of use. We’ve designed multiple apps, and ease of use is a huge barrier. We need apps that are extremely easy to use, like a big red button for someone experiencing severe suicidal ideation.

- Dr. Narayanan: We also need to make sure that researchers developing these technologies are not just patient-centric but also consider the needs of providers, family members, and others.

CCC Council Member Dr. Katie Siek asked the next question: When you are doing just-in-time interventions, older people have different skin types, pregnant people have fluctuating weights, and so on. How do you reach them just in time with the proper sensors?

- Dr. Choudhury: One of the things we’ve seen repeatedly is that different people manifest changes in different ways. What really matters is whether there is a drastic change from someone’s normal behavior, so you must watch the trend. Also, how do you combine the data we get from everyday devices with clinical data? None of this data on its own will give you the full story, but combining the two will reveal a lot.
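The point about watching for drastic changes from a person’s own normal behavior, rather than comparing everyone to a single norm, can be illustrated with a per-person baseline check. The sketch below is a hypothetical example, not a method described by the panelists: `flag_deviations`, the seven-day window, and the two-standard-deviation threshold are assumptions, and the input could be any daily signal from an everyday device, such as hours of sleep.

```python
from statistics import mean, stdev


def flag_deviations(values, window=7, z_threshold=2.0):
    """Return indices of days that deviate sharply from the person's own
    recent baseline (hypothetical rolling z-score check).

    values: one number per day (e.g., hours of sleep) for a single person.
    window: number of preceding days used as that person's baseline.
    z_threshold: how many standard deviations count as a "drastic" change.
    """
    flagged = []
    for i in range(window, len(values)):
        history = values[i - window:i]
        mu = mean(history)
        sigma = stdev(history)  # sample standard deviation of the baseline
        if sigma == 0:
            # A perfectly flat baseline: any change at all counts as a deviation.
            if values[i] != mu:
                flagged.append(i)
            continue
        if abs(values[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    sleep_hours = [7, 7.5, 7, 6.5, 7, 7.5, 7, 3]  # illustrative values only
    print(flag_deviations(sleep_hours))
```

With the illustrative sleep values above, only the last night (index 7) is flagged. Because the baseline is each person’s own recent history, the same absolute value could be normal for one person and a warning sign for another, which matches the observation that different people manifest changes in different ways.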

Dr. Robin Brewer, a panelist for the CCC-sponsored session “Surveillance, Assistance, or Hindrance?: Technology for Older Adults Caregiving,” asked the next question: “For just-in-time interventions for mental health, how do you design them in a way that isn’t ‘creepy’ so people will use and accept them?”

- Dr. Choudhury: Design and ease of use are important. The heart rate device is designed to be embedded in a watch, so it is part of what people are already wearing and doesn’t stand out. The breathing device is embedded as part of the camera to regulate personal airflow. The stroking device is usually worn under your clothes or somewhere you cannot see it. We are not there yet with a lot of these technologies, but ease of use is so important.

- Dr. Narayanan: Occupational therapists work with children with autism, for example. For these children, haptics and touch are important, but touch is not always perceived the way people not on the spectrum would perceive it. Knowing and personalizing signals for people, with appropriate design, is crucial. Taking the thought and time to individualize the technological intervention is key.

Next, an audience member asked: “Do you see AI being incorporated into these technologies, or advancements in AI being applied to them?”

- Dr. Czerwinski: We can’t do anything in HCI [Human-Computer Interaction] without AI. Yes, AI has to be incorporated, and we have been using great algorithms. We have a lot of work to do, especially in personalization; it is getting there but is not done. A lot of people are using ChatGPT for therapy, which is concerning.

- Dr. Choudhury: We have such rich recommendation systems that influence everyday life, but not for mental health. How do you know which signals are important? For example, if you know that a user’s mental health is affected by disrupted sleep, you can personalize recommendations that target the right symptom. Rather than just setting a sleep schedule, you would deliver interventions based on the sleep the person actually got. AI can tie that to personalization and recommendations. There are a lot of chatbots that are fully based on language models, and then there are sensor-based and multimodal solutions. One question is, when someone is exhibiting symptoms of distress and entering them into the app, what can a chatbot easily address, and when is a human needed to alleviate the symptoms? How do you do these handoffs and triage so you reduce the risks of automated responses?

- Dr. Narayanan: Machine intelligence is an ecosystem. The ability to provide a measurable way to enhance human cognitive abilities, by filling gaps in treatment plans, would be invaluable. For example, if someone reports changes in their sleep patterns, it is important to know about any recent medication changes, what happened in their recent therapy sessions, and so on. Knowing this information would enhance a provider’s ability to make an informed decision about the patient’s next steps. There is uncertainty and variability in the process, and you must sift through the information presented. Machine learning and other methods can integrate these data and stratify them, classifying them and examining them along more dimensions. We take in information and distill it, but what do we generate in response? Finally, technology interfaces can enhance [other forms of] interventions, but they must connect back to the people involved. Effective collaboration between those people and the technology is possible; we are not there yet, but we are working on it. It is a collaborative enterprise with everyone involved, and technology is one enabler of this ecosystem.

Another audience member asked: “My question is not so much about technology, but about usage. How widely is it being used in this country? And do you have thoughts for countries where people don’t use cell phones as much?”

- Dr. Narayanan: On the science side: when you look at medicine, technology has made huge changes, for example in targeted drugs. Psychiatry is far behind; we don’t have an understanding of mechanisms at scale. Technology can support the diagnostic and screening side in various ways. This panel gave many examples of the importance of just-in-time intervention, and that is also true on the screening and diagnostic front. On the treatment side, enabling access and personalizing treatment is crucial. This is only recently being tried; there is no parallel to drug development, and we need a lot of studies. It is still an open question with no set solution. Mental health clinics are slowly adopting tools. We are in a phase of growth; global adoption is the goal, but we need to think it through. This is definitely an area with lots of research opportunities, especially in the mental health space.

- Dr. Choudhury: Access exists for some interventions; teletherapy and other services are available through employers. We do need to improve quality, and we’re seeing startups do their own internal testing to see how care is improving. There are a lot of rich signals that could help deliver better care, and some of these have been used in physical settings, for example to detect physical activity. It isn’t a clear-cut story. If you are suggesting treatment, or if you are taking action based on someone being suicidal, you need FDA approval.

Next, someone in the room asked: “Would the use of psychedelics, such as ketamine, make a great subject for a study designed around the substances and how technology is intertwined with them?”

- Dr. Choudhury: There are studies happening. This is one of the things that isn’t clear, such as when someone needs to be redosed and what level of dosing is needed. Some clinical studies are combining multimodal treatments and psychedelics to see when people need to be redosed.

- Dr. Czerwinski: We are starting to see some individuals go into worse mental health episodes with their use, especially when they are experimenting on their own. Interactions with other drugs are an important aspect too.

- Dr. Narayanan: We can get to the people instead of asking them to come to us. Some studies include interventions…

The last audience question was, “The self-regulation technology you talked about is interesting from the perspective of neuroplasticity. In theory, drugs promote neuroplasticity, and people could enhance that ability on their own. It feels like a natural extension of self-regulating technology.”

- Dr. Choudhury: That is a great question: how do you extend the effect of some of these interventions? I don’t know if there are studies on that, though.

Dr. Yanco asked the final question of the session: “How do we design for trustworthiness and privacy and how do we control our data?”

- Dr. Czerwinski: Very carefully. At Microsoft we have to go through so many reviews to ensure everything is private.

- Dr. Choudhury: It varies from person to person and condition to condition. For schizophrenia, we know it is lifelong, and really impacts quality of life. If you tie it to care delivery there is much more adoption. Also, how valuable it is changes depending on the condition of the person suffering.

- Dr. Narayanan: Whose point of view is important: the individual’s, the provider’s, their family’s, the system’s? Bringing in technology to mediate means giving it access to sensitive information, which may include other details about a person’s situation. From an AI perspective, there are a number of efforts to develop privacy-preserving protections, and there are many supporting policy and legal efforts. It all comes down to the benefits gained by bringing technology into the care system and how that technology is used. How do we create that, and at what cost? It is an ongoing choice. Delivering technology that is inclusive, safe, secure, and equitable is a positive thing.

Thanks to the incredible panelists for participating in this session! Stay tuned next Thursday for our final AAAS panel recap: Maintaining a Rich Breadth for Artificial Intelligence.