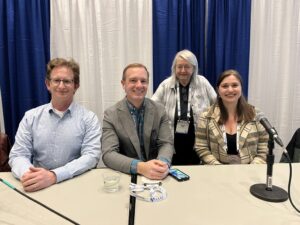

CCC supported three scientific sessions at this year’s AAAS Annual Conference, and in case you weren’t able to attend in person, we will be recapping each session. Today, we will summarize the highlights of the panelists’ presentations from the session “Large Language Models: Helpful Assistants, Romantic Partners or Con Artists?” This panel, moderated by Dr. Maria Gini, CCC Council Member and Computer Science & Engineering professor at the University of Minnesota, featured Dr. Ece Kamar, Managing Director of AI Frontiers at Microsoft Research; Dr. Hal Daumé III, Computer Science professor at the University of Maryland; and Dr. Jonathan May, Computer Science professor at the University of Southern California Information Sciences Institute.

Large Language Models are at the forefront of conversations in society today, and the jury is still out on whether they are living up to the hype surrounding them. The panelists of this AAAS session addressed the possibilities, challenges, and potential of LLMs.

The first panelist was Dr. Ece Kamar (Microsoft Research). She described the current status of AI as a “Phase Transition.” She provided a unique perspective as someone who has seen the changes in AI in industry, and the exponential growth in deep learning models that very few people anticipated would continue into 2024.

The growth was driven by an increase in the amount of data that LLMs are trained on and by a larger architecture, the transformer. An interesting insight Dr. Kamar shared on the graph is that the models are scaling so rapidly because they were initially just trained for a particular task, a task they could reliably perform. ChatGPT showed that if you scale a model far enough, including in the number of parameters it takes into account, it can start completing tasks at a level similar to that of a model trained specifically for those tasks.

This is the definition of the LLM phase transition: models no longer need to be trained for a specific task, but can be generally trained and then perform many tasks. And there are no signs that the growth of these capabilities is slowing down.

Dr. Kamar had early access to GPT-4, and during her extensive time testing it, she was impressed by the significant improvements that came with scale and data, and by the fact that it could accomplish different tasks simultaneously.

What does the future hold for these LLMs? Dr. Kamar anticipates that LLMs will go beyond human language, learn machine language, and be able to translate between the two. This would expand the modalities the models can take as input and produce as output, which could lead to models that generate not just language but also actions and predictions of behavior.

Next, Dr. Kamar expanded on the significant phase transition occurring in computing. Systems are being developed very differently today, and this development will require creating a new computing paradigm which we have only scratched the surface of at this time. The way we interact with computers is going to look a lot different in the coming years, and this will require re-thinking of Human-Computer Interaction (HCI).

Another change is the way that humans will work moving forward. Microsoft has conducted studies showing that workers’ productivity, measured in lines of code written, can double when they are assisted by AI. This is an incredible feat, but how this technology works and where its intelligence comes from are largely unknown, so there are a lot of open research questions in this area.

There are also a lot of questions about potential misuse of LLMs like these. There are concerns around fairness, different demographic risks, and other even more drastic consequences. While there is great potential for scientific discovery, there is also great potential for harm, for instance convincing parents not to vaccinate their kids, convincing a child to do something bad, or convincing someone that the world is flat. A lot of safety effort has gone into the development of LLMs, and open sourcing can be very helpful for making progress in this area as well.

Dr. Kamar then posed questions to the scientific community:

- How will science change with AI disruption?

- Are we taking steps to transform how we educate and train the next generation?

- Are you building technological infrastructure to benefit from this phase transition?

- Are we preparing future generations for the new world?

Finally, Dr. Kamar emphasized that one of the remarkable core aspects of the phase transition is the speed at which LLMs are developing. These models are improving significantly in a very short period of time, and computing researchers have a lot of catching up to do.

The second panelist, Dr. Hal Daumé III (University of Maryland), started his talk by explaining that AI models should be developed to help people do the things they want to do: augment human work, not automate it. The vision of automation, however, has pervaded society since the 60s. Rather than helping people play chess better, scientists designed a system that plays chess on its own.

This philosophy isn’t going anywhere; AI today is still newsworthy once it is intelligent enough to do a task on its own. This is deep in the blood of AI. Before spending time and money on automating a system, we should first pause and ask whether this is in our interest.

Dr. Daumé pushed the concept of augmentation: how can AI be used as a tool? Systems like GitHub Copilot increase productivity, but increasing productivity is not enough. A user of the system exclaimed that it let them focus on the parts of coding that were fun, which is much more in line with how AI should be built.

AI researchers shouldn’t want to remove the parts of a person’s job that are fun; they should prioritize removing the drudgery. AI should improve human lives rather than just improve the bottom line for a company.

Dr. Daumé co-authored a paper raising these points, and the counterargument emerged that, from a technical perspective, it is often a lot easier to build machine learning systems that automate than systems that augment. This is because the data necessary to train a system that automates a task are easy to come by: we supply this information by doing our jobs, and it is easy to train ML to emulate human behavior. It is much harder to teach a system to help someone complete a task. The relevant information is scattered among sources such as literature reviews from NSF or a programmer’s notes on a piece of paper. The data necessary to help a human do tasks isn’t recorded.

Another key aspect of building helpful systems is asking users what systems would be helpful in their lives. For instance, the needs of blind people are very different from the needs of sighted people (which are also different from what sighted people think the needs of blind people are). An example Dr. Daumé shared was that a visual system might reveal that an object is a can of soda, but a blind person can typically tell that on their own. The ingredients of the soda would be much more useful to them. There is an enormous gap between the quality of a system’s responses to simple understanding questions and the quality of its responses to accessibility questions, and this gap is widening.

Content moderation is another example of why it is important to determine a community’s needs before creating technology to “help” them. Many volunteer content moderators engage in the work because they want to make the world a better place and help build a community they think is important. When asked what kind of tool they want to assist them in their role, they often don’t want their job to be fully automated; they just want boring parts, like looking up chat history, to be easier.

Dr. Daumé wrapped up this discussion with a final example: his car-loving mom, who refuses to drive cars with automatic transmissions. She chooses manual transmission, and it is really important for her to have that choice. People should have control over whether their tasks are automated or not.

Dr. Daumé continued the conversation by offering alternatives to current approaches to accessibility technology. For example, when building a tool around sign language recognition, developers could reach out to the community and initiate a project that empowers its members to submit videos to train the tools, instead of scraping the internet for videos of people signing. Scraping raises a lot of consent and privacy concerns, and most of the scraped videos show professionals signing without background noise or distractions, which isn’t realistic. Community-first strategies like these are more ethical and responsible, and give users more control.

LLMs and other tools should be developed to prioritize usefulness, not intelligence, Dr. Daumé concluded. The more useful a tool is, the more it can help people do something they can’t or don’t want to do, rather than automate something that people already do well and enjoy.

Dr. Jonathan May (University of Southern California Information Sciences Institute) was the next speaker, and he began his talk by reflecting on the theme of the conference: “Towards Science Without Walls.” He posits that while recent LLM development takes walls down for some people, it is building walls for many.

He first discussed how the internet lowered many barriers to conducting research. When he was 17, he wondered why Star Wars and Lord of the Rings had very similar plots, and he had to drive to the library and find a book with the answer. He did higher-stakes but equally arduous research for his PhD thesis, but by the end of his studies a Wikipedia page had been created on the topic. Then came internet search, and now car-less research is the norm.

Dr. May continued by saying that he felt privileged to be in the demographic for the target audience of LLMs. He doesn’t code often and never learned a lot of coding skills, but when he does need it for his work he can ask ChatGPT and it does a great job.

However, there are a lot of walls that keep the usefulness of LLMs from being widespread:

- Language Walls: Models work better the more data they are trained on. While today’s commercial LLMs are multilingual, they are heavily weighted towards English. For instance, ChatGPT’s training data is 92% English. Further, the instruction data, which is the “secret sauce” of LLMs, is overwhelmingly English (96% of ChatGPT’s, for example). There are currently very few efforts to improve the cross-lingual performance of these models despite systemic performance gaps on existing tests, which is understandable given the general consensus that machine translation (MT) is “solved” and that efforts should be focused on other tasks.

- Identity Walls: If you ask ChatGPT what you should do on Christmas, it focuses on different activities and traditions you can engage in; it doesn’t mention that you could go to work. LLMs have been shown to behave differently when describing different demographic groups, expressing more negative sentiment and even outright toxicity in some cases. Models assign probabilities to stereotypical sentences that can cause harm to communities such as LGBTQ+ or Jewish people; across the board there is a lot of bias, and this has consequences in deployed decision-making. There are some safeguards built in, and more explicit probing questions are less likely to receive toxic answers, but models probabilistically prefer stereotypical statements and outcomes. That is where the harms arise, especially when models are used in downstream applications where you don’t see the output (e.g., loan eligibility). He gave an example of LLMs showing bias when generating faces of individuals based on their job: the lower-paying jobs are shown as women and minorities, whereas the higher-paying jobs are shown as white males.

- Environmental Walls (software): LLMs require a significant amount of energy to produce and run. Even the most “modest” LMs use 3x more energy than a single person uses in a year. There is also a significant gap in data for the largest language models like ChatGPT, because the companies that own them explicitly deny access to their energy consumption figures.

- Environmental Walls (hardware): In order to produce chips, which all LLMs require, you need “conflict minerals” like tantalum (mined in Congo) and hafnium (mined in Senegal and Russia). In the US, companies are supposed to report the amount of conflict minerals they use, but the US is publicly showing a decrease in the use of these materials, which Dr. May argued cannot be true. Beyond that, there are a lot of socio-political problems, like China restricting germanium and gallium in retaliation for US export restrictions.

Dr. May expressed that these categories reveal some of the many downstream harms caused by LLMs, and instances where people are not benefiting. There is cause for concern, but there are also opportunities for research and/or behavior changes that would mitigate some of these harms:

- Language: Devote more research funding to multilinguality (not just hegemonic translation to and from English).

- Identity: Bottom-up and community-inclusive research. Model modification and testing before deployment.

- Environment: Algorithm development that uses less data and alters fewer parameters (e.g. LoRA, adapters, non-RL PO). Be conscientious about compute and insist on openness at regulatory levels.
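
To make the "alters fewer parameters" point concrete, here is a rough back-of-the-envelope sketch of how a LoRA-style low-rank update compares with updating a full weight matrix. The layer size (4096 × 4096), the rank (8), and the function names are illustrative assumptions, not figures or code from Dr. May's talk.

```python
# Illustrative arithmetic only: the layer size and rank are assumed values,
# not figures from the talk. Function names are hypothetical.

def full_finetune_params(d_in: int, d_out: int) -> int:
    """Trainable weights when the whole d_out x d_in matrix is updated."""
    return d_in * d_out


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable weights for a low-rank update B @ A, where A is rank x d_in
    and B is d_out x rank; the original matrix stays frozen."""
    return rank * d_in + d_out * rank


if __name__ == "__main__":
    d_in, d_out, rank = 4096, 4096, 8  # hypothetical layer size and rank
    full = full_finetune_params(d_in, d_out)
    lora = lora_params(d_in, d_out, rank)
    print(f"full fine-tuning: {full:,} trainable weights")
    print(f"LoRA (rank {rank}): {lora:,} trainable weights")
    print(f"LoRA trains {lora / full:.2%} of the layer")
```

Under these assumptions, the low-rank update trains 65,536 weights instead of 16,777,216, roughly 0.39% of the layer, which is one reason such methods can reduce the compute needed to adapt a model.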

Dr. May wrapped up the panel by reiterating Dr. Daumé’s point that people should benefit in the way they want to benefit when interacting with LLMs, and this needs to be top of mind at the development stage.

Thank you so much for reading, and please tune in tomorrow to read the recap of the Q&A portion of the session.