CCC supported three scientific sessions at this year’s AAAS Annual Conference, and in case you weren’t able to attend in person, we are recapping each session. This week, we are summarizing the highlights of the session, “How Big Trends in Computing are Shaping Science.” In Part 4, we hear from Dr. Mehmet Belviranli, an Assistant Professor of computer science at Colorado School of Mines, in his presentation, titled, “Taming Diversely Heterogeneous Compute Systems.”

Dr. Mehmet Belviranli rounded out the panel by discussing heterogeneous compute systems, and their role in accelerating computing. “Heterogeneous computing”, said Belviranli, “is any kind of computing, in addition to CPUs, that relies on other architectures as well, such as GPUs and TPUs. These other architectures are often called ‘domain specific accelerators’.”

Almost every computing device can be categorized in the scale shown in the figure below, Dr. Belviranli explained. On the left hand side of the scale are general purpose processors and on the right hand side are hardwired logic, and everything in between can be categorized as domain specific accelerators. General purpose processors (CPUs) are much more flexible and can handle a wider range of tasks. However, as we move right on the scale, the efficiency, or number of tasks the processor is able to perform given a set amount of time, increases. The graph, shown on the right hand side of the figure, displays the efficiency of different processors for a given task in images per Watt. In both tasks, the ASIC performs most efficiently. “You may wonder, if the ASIC performs most efficiently in both these tasks, why don’t we use ASICs for everything?” said Dr. Belviranli. “It is because ASIC processors are extremely application specific, and in most cases are single function, so it would not be helpful for any other computing you do everyday, except the one it is designed for. ”

“We see accelerators everywhere nowadays. Google uses TPUs in their Google Cloud, Amazon Web Services uses F1 FPGAs, and autonomous systems incorporate many types of heterogeneous accelerators into their hardware. And this is not going to stop. In the future, it is expected that quantum computers will become accelerators that can be plugged into classical computers, and they will become a part of mainstream computing.”

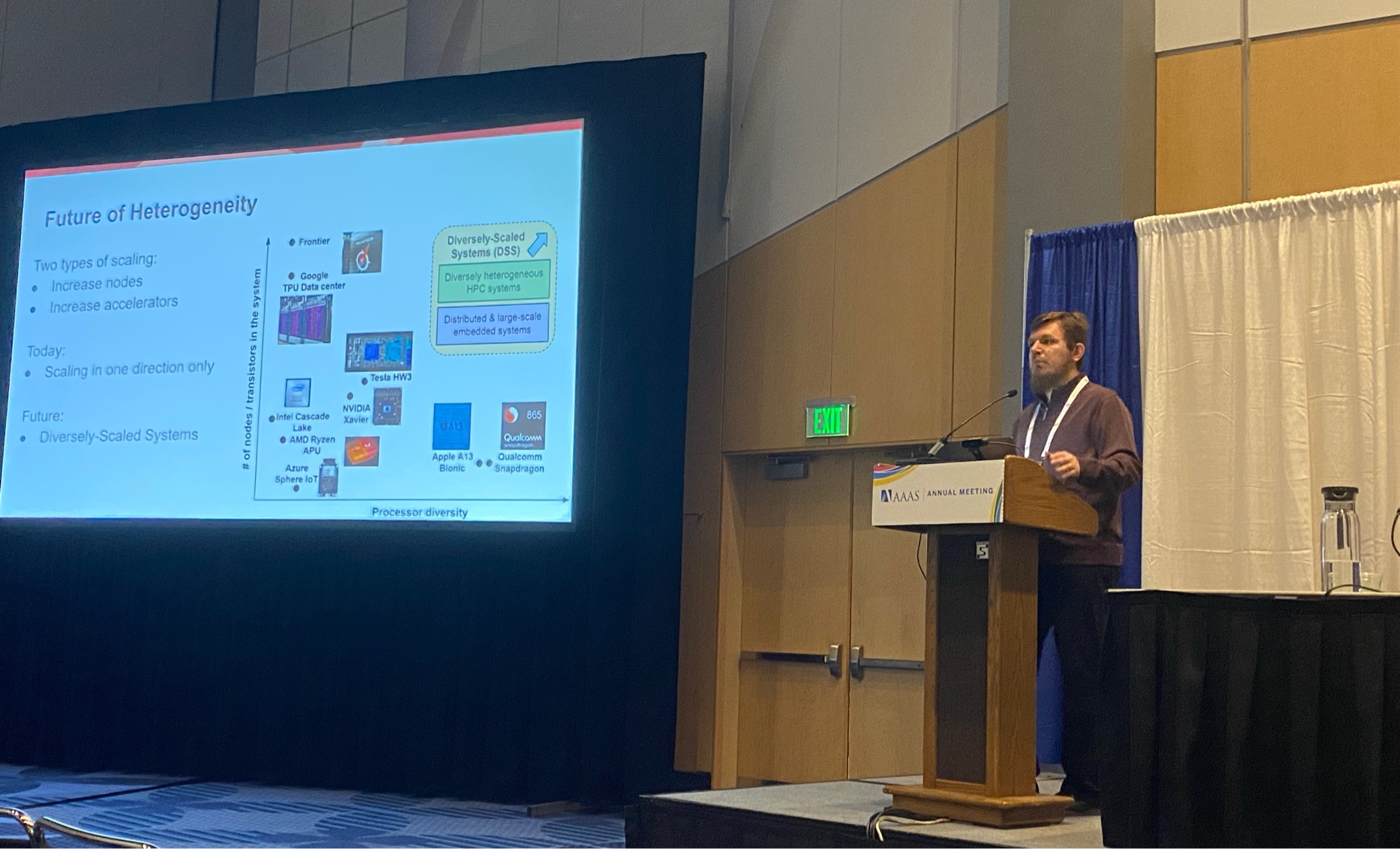

The figure below shows how accelerators can be divided into a diversity of scales. The Y axis of the graph below shows the increase in nodes all the way up to the level of current supercomputers, to meet computationally demanding loads, especially those in scientific research. The more nodes and servers there are in a system, the faster we can run these computations.

The X axis, on the other hand, displays chips that are used everywhere in the world, especially in smartphones. These are highly specialized devices that are unlike supercomputers, because rather than having many CPUs incorporated in them, they have many diverse accelerators. “Apple’s A12 System on Chip, for example, has more than 40 domain specific accelerators that are targeted to very specific tasks, such as 5G communication and biometric authentication. These domain specific accelerators increase the efficiency of your smart phones tremendously, and without them, your phone battery wouldn’t last even an hour.”

“In the near future, we expect to see progress scale in both directions on this graph”, said Dr. Belviranli. “We are expecting future supercomputers to have not only GPUs but also many types of accelerators. Mobile and autonomous systems will be connected and the computation will accumulate from these devices communicating, which will lead to some complex problems down the road.”

Dr. Belviranli displayed the figure below to highlight some of these future concerns. “If you want to run a convolution on the system displayed here, you can run it on four different processors (the Carmel CPU cores, Volta GPU, PVA, and DLA). But programming the same function on four different processors is not straightforward. There are too many ISAs (Instruction Set Architectures – how the CPU is controlled by the software) and APIs, and we often lack a universal programming abstraction to simplify this process. You can see in the chart in the bottom right hand side of the figure below, each processor has a completely different ISA, so anyone working on this system would need to understand all four ISAs and both APIs. Even if you manage to program all of these cores to run the same convolution, you will need to characterize the performance of each processor, using different units for different goals, like measuring energy efficiency or precision. Even if you manage to do that too, you have to factor in runtime to properly schedule and execute interchange between all of these systems. As you can see, the breadth of the computing expertise required to program these diverse systems separately is above the level most researchers possess, and above even most computer scientists.”

Dr. Belviranli then discussed some of his recent research, shown in the figure below. Belviranli and his collaborators ran a machine learning workload on multiple different systems. In the first system, the workload was run on only a GPU, and the resulting runtime was very low, but the execution was not very energy efficient. Conversely, Dr. Belviranli ran the same workload on only a DLA (Deep Learning Accelerator) and the runtime was longer, but the system was more energy efficient. Belviranli’s team then developed a system which utilized both processors to run the workload collaboratively, providing an optimal tradeoff between energy efficiency and runtime performance.

To enable science to use these diverse systems, we need to approach the problem from multiple directions. The figure below displays each of the six pillars that need to be considered when introducing these systems to new scientific disciplines. Dr. Belviranli highlighted “programming abstractions” as one of the most important considerations when creating these systems to be used in other scientific disciplines, because each researcher who will want to use these diverse systems will not have the knowledge of every ISA and API of every accelerator in a given system, and they shouldn’t have to. These systems should be made so that anyone with a computing background can tailor the systems to their own research needs.

Thank you so much for reading! Tomorrow, we will release the final recap of this series, which will detail the Q&A portion of this AAAS panel.