Joseph Sifakis discusses autonomous systems at HLF 2019

In Tuesday’s opening lecture at the Heidelberg Laureate Forum (HLF), Joseph Sifakis, 2007 Turing Award winner, discussed whether we can trust autonomous systems and considered the interplay between the trustworthiness of the system – the system’s ability to behave as expected despite mishaps – and the criticality of the task – the severity of the impact an error will have on the fulfillment of a task.

Sifakis defined autonomy as the combination of five complementary functions – perception, reflection, goal management, planning, and self-awareness/adaptation. The better a given system can manage these functions, the higher its level of autonomy, on a scale from 0 (no automation) to 5 (full automation, no human required).

He also noted that historically the way that the aerospace and rail industries attempted to resolve the tension between criticality and trustworthiness is very different from the current standards in autonomous vehicles (AV). According to Sifakis:

- “AV manufacturers have not followed a ‘safety by design’ concept: they adopt ‘black-box’ ML-enabled end-to-end design approaches

- AV manufacturers consider that statistical trustworthiness evidence is enough – ‘I’ve driven a hundred million miles without accident. Okay that means it’s safe.’

- Public authorities allow ‘self-certification’ for autonomous vehicles

- Critical software can be customized by updates – Tesla cars software may be updated on a monthly basis.” By contrast, aircraft software and hardware components cannot be modified.

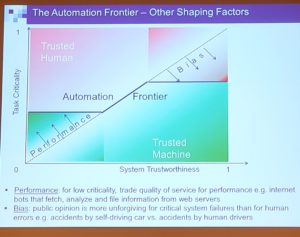

Shaping factors of the automation frontier

However, for society to truly trust autonomous vehicles, scientists must offer greater levels of validation. Sifakis argued that simulation offers a way to validate these systems without running them in the real world, but simulations are only as realistic as they are programmed to be. For simulation to truly provide a benefit, it must be realistic and offer semantic awareness by exploring corner-case scenarios and high-risk situations.

We must also consider the human factors that shape the automation frontier. Generally, humans are more willing to trade quality of service for performance in low-criticality situations, while they are less forgiving of autonomous system failures that lead to serious accidents or deaths than they would be if the same event were caused by a human being.

Following the day’s lectures, all of the HLF participants took a trip to the Technik Museum Speyer for dinner. The museum was open for participants to explore: standout exhibits included many vintage cars, a large organ, the space shuttle BURAN, and a Boeing 747. The Boeing 747 was displayed about four stories in the air, and the options for exiting from that height were the stairs or a steep slide. If you ever have a chance to visit, I would definitely recommend taking the slide down.